Modern cars have been around for over a century and are fundamental to our daily lives. In all that time, one skill has always been required before getting behind the wheel: driving. Not anymore. There’s a huge change coming to the car industry, and it goes by the name of self-driving cars.

Now you must be wondering: how do these self-driving cars work, anyway? First computers learned how to play chess, and now they can drive cars? Well, self-driving cars rely on five key components: computer vision, sensor fusion, localization, path planning, and control.

Computer Vision: The Sight Provider

Computer vision is the branch of artificial intelligence that allows computers to interpret images and video of the world around them. Being aware of your surroundings is crucial to driving, so computer vision is one of the main components of a self-driving car.

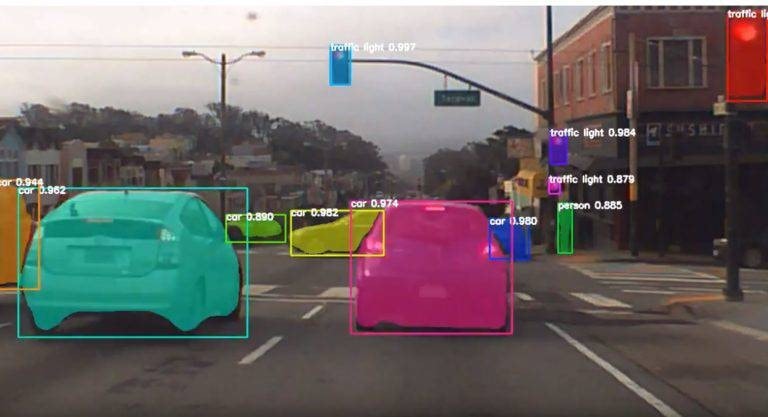

Cameras capture images of the car’s surroundings, and Convolutional Neural Networks (neural networks designed to process images) detect and classify lanes, vehicles, and other objects in them. Colors, boxes, and lines mark the different objects, as in the image above.
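To get a feel for what a convolutional layer does, here is a minimal sketch in plain Python. It slides one small filter over a toy grayscale "road patch" and produces a response map that lights up at the edges of a lane line. The image values and the hand-picked `VERTICAL_EDGE` filter are assumptions for illustration; a real network learns thousands of filters from training data.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like CNN layers, this computes a cross-correlation: the kernel is
    not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 4x6 grayscale patch: dark asphalt (0) with a bright lane line (9).
ROAD_PATCH = [
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = convolve2d(ROAD_PATCH, VERTICAL_EDGE)
for row in response:
    print(row)
```

Positive values mark the left edge of the lane line (dark to bright) and negative values mark the right edge (bright to dark). Stacking many such filters, with learned weights, is what lets a CNN go from raw pixels to "lane", "car", or "pedestrian".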

Pretty cool, right? However, sight alone isn’t enough to make an autonomous vehicle operate properly…

Sensor Fusion: Combining the Sensors

Sensor fusion combines data from the car’s other sensors with the data from the cameras. Incorporating the different types of data gives the car a better understanding of its environment, similar to the way a human uses all of their senses to get a better sense of their surroundings.

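There are usually five types of sensors on a self-driving car: ultrasonic sensors, radar, cameras, LiDAR, and GNSS. Ultrasonic sensors, radar, and LiDAR all measure the distance to an object, but in different ways. Ultrasonic sensors use sound waves and only work at short range, which makes them useful for parking. Radar uses radio waves to measure the distance and relative speed of objects (it picks up metal objects such as other vehicles especially well) and keeps working in rain and fog. LiDAR uses laser pulses to build a detailed 3D map of the surroundings, often with 360° visibility. The GNSS (Global Navigation Satellite System) receiver, of which GPS is the best-known example, estimates the car’s position on Earth. Sensor fusion is the step that gathers all of this data together so the car can process its surroundings.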
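One simple way to picture sensor fusion is to combine two distance readings of the same object, trusting the more precise sensor more. The sketch below uses inverse-variance weighting. The function name and the radar and LiDAR numbers are made up for illustration, and production systems typically use more elaborate filters.

```python
def fuse_measurements(readings):
    """Fuse (distance_m, variance_m2) readings by inverse-variance weighting.

    Returns the fused distance and its variance. Readings with a smaller
    variance (more trustworthy sensors) get a larger weight.
    """
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_distance = sum(
        weight * distance for weight, (distance, _) in zip(weights, readings)
    ) / total_weight
    fused_variance = 1.0 / total_weight
    return fused_distance, fused_variance


# Hypothetical readings of the distance to the car ahead.
RADAR = (10.4, 0.04)   # 10.4 m, noisier
LIDAR = (10.0, 0.01)   # 10.0 m, more precise

fused_distance, fused_variance = fuse_measurements([RADAR, LIDAR])
print(f"fused distance: {fused_distance:.2f} m")      # about 10.08 m
print(f"fused variance: {fused_variance:.3f} m^2")    # about 0.008 m^2
```

The fused estimate sits closer to the LiDAR reading, and its variance is smaller than either sensor’s on its own, which is the whole point of combining them.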

Now, moving on to the next component…

Localization: Identifying a Location

Localization is how a car identifies its location in the world. This is done using algorithms that combine data from sources such as GPS, landmark positions, and the sensor fusion stage. An initial estimate is drawn from the GPS data, and then the movements of the car, landmark positions, and other pieces of data refine it into a final estimate of the location. In good conditions, the error can be as little as 1 to 2 cm.
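The "rough GPS guess, then refine" idea can be sketched with a one-dimensional Kalman filter, where the car’s position along a road is tracked as a mean and a variance. This is a simplified stand-in for what real localization stacks do, and all the numbers below are assumptions chosen for illustration.

```python
def predict(mean, variance, motion, motion_variance):
    """Motion step: the car moves, so the estimate shifts and gets less certain."""
    return mean + motion, variance + motion_variance


def update(mean, variance, measurement, measurement_variance):
    """Measurement step: blend the estimate with an observation.

    The Kalman gain decides how much to trust the new measurement.
    """
    gain = variance / (variance + measurement_variance)
    new_mean = mean + gain * (measurement - mean)
    new_variance = (1.0 - gain) * variance
    return new_mean, new_variance


# Initial estimate from GPS: 100 m along the road, give or take about 3 m.
position, uncertainty = 100.0, 9.0

# Odometry says the car drove 5 m (with some wheel slip).
position, uncertainty = predict(position, uncertainty, motion=5.0, motion_variance=1.0)

# A known landmark implies the car is at 106 m, with much tighter error.
position, uncertainty = update(position, uncertainty, measurement=106.0,
                               measurement_variance=0.1)

print(f"position: {position:.2f} m, variance: {uncertainty:.3f} m^2")
```

After a single landmark observation, the variance drops from 10 m² to about 0.1 m², which shows why precise landmark measurements matter so much more than the initial GPS fix.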

Now, I know what you’re thinking. All of this is great, but will it still work in bad weather? It can! In inclement weather, cars can be localized using Localizing Ground-Penetrating Radar (LGPR), which maps the ground beneath the road instead of relying on visible features such as lane markings.

After localizing, your current location is known, so all you have to do now is get to your destination!

Path Planning: Charting the Trajectory

Path planning is pretty self-explanatory. It’s the component that charts the car’s path to its destination based on the information the sensors are providing. Using data from all the sensors makes it possible for the car to predict whether it needs to slow down, accelerate, change lanes, or stop altogether.
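As a toy illustration of charting a path, the sketch below treats the road as a grid (rows are lanes, columns are distance ahead) and uses breadth-first search to find the shortest route around a blocked cell. The grid and function name are assumptions for this example. Real planners work with continuous trajectories, speeds, and traffic rules.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a grid where 0 is free and 1 is blocked.

    Returns the shortest list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Two-lane road; a stopped vehicle blocks lane 0 at column 2.
ROAD = [
    [0, 0, 1, 0, 0],  # lane 0
    [0, 0, 0, 0, 0],  # lane 1
]

path = plan_path(ROAD, start=(0, 0), goal=(0, 4))
print(path)
```

The returned path moves into lane 1 to pass the obstacle and then returns to lane 0, which is the grid-world version of a lane change.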

Another task done in the path planning stage is predicting the movements of vehicles in close proximity to avoid collisions. After the movements are predicted, the car decides which action is safe to make.
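A very rough way to sketch this prediction step is time-to-collision: assume both cars keep their current speed and work out how long until the gap closes. The function names and the 3-second braking threshold below are assumptions for illustration, not values from any particular system.

```python
def time_to_collision(gap_m, ego_speed_mps, lead_speed_mps):
    """Seconds until the gap closes, assuming both speeds stay constant.

    Returns None when the car ahead is not getting closer.
    """
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return None
    return gap_m / closing_speed


def choose_action(gap_m, ego_speed_mps, lead_speed_mps, brake_threshold_s=3.0):
    """Pick a simple action from the predicted time to collision."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    if ttc is None or ttc > brake_threshold_s:
        return "keep_speed"
    return "brake"


print(choose_action(gap_m=20.0, ego_speed_mps=25.0, lead_speed_mps=15.0))  # brake
print(choose_action(gap_m=50.0, ego_speed_mps=25.0, lead_speed_mps=15.0))  # keep_speed
print(choose_action(gap_m=20.0, ego_speed_mps=15.0, lead_speed_mps=25.0))  # keep_speed
```

Real prediction modules model turning, acceleration, and several possible futures per vehicle, but the pattern is the same: predict first, then pick the action that stays safe.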

Finally, the most exciting component of all…

Control: Eliminating the Need for Human Assistance

Control is the component that makes it possible for the vehicle to execute the course created in the path planning stage. It handles all the parts of driving that a human normally would, such as turning the steering wheel or hitting the brakes.
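A classic way to turn "follow this path" into steering commands is a PID controller, which reacts to how far the car is from where it should be. The sketch below is a minimal version with an oversimplified car model (the command directly sets the sideways speed). The gains and the model are assumptions for illustration, and the integral gain is left at zero to keep the example easy to follow.

```python
class PIDController:
    """Proportional-integral-derivative controller."""

    def __init__(self, kp, ki, kd):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral = 0.0
        self.previous_error = None

    def step(self, error, dt):
        """Return a control command for the current error."""
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0  # no history yet on the first call
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_lane_centering(steps=50, dt=0.1):
    """Start 1 m off the lane center and let the controller steer back."""
    controller = PIDController(kp=1.0, ki=0.0, kd=0.1)
    offset = 1.0  # metres from lane center
    for _ in range(steps):
        error = 0.0 - offset           # target offset is zero
        command = controller.step(error, dt)
        offset += command * dt         # toy model: command = lateral speed
    return offset


final_offset = simulate_lane_centering()
print(f"offset after 5 s: {final_offset:.4f} m")
```

In this toy run the car ends up within a few centimetres of the lane center after five simulated seconds. Tuning the three gains trades off how quickly the car corrects against how smooth the ride feels.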

This is where autonomous vehicles have an advantage over conventional cars: they can be a lot more precise than a car operated by a human driver. This extra precision is what can make self-driving cars safer than conventional cars. Most car accidents are caused by human error, so if autonomous vehicles take the human out of the equation, there should be far fewer accidents.

Key Takeaways

- The five components essential to an autonomous vehicle are computer vision, sensor fusion, localization, path planning, and control

- Computer vision is powered by cameras and allows computers to see

- Sensor fusion combines data from other sensors with data from the cameras

- Sensor fusion often provides measurement data (e.g. the distance between cars)

- Localization makes it possible for a car to identify its location in the world

- Path planning allows the car to plan a trajectory that will lead to the desired destination

- Control handles the tasks a human usually would (e.g. turning the steering wheel) and executes the path made in the path planning stage

With the arrival of self-driving cars, 21st-century transportation is receiving a much-needed addition. These cars could make the roads a lot safer than they are today. Leave a comment below letting me know your opinion on self-driving cars! Do you think they’re an improvement that will make our daily lives easier, or are you still more comfortable with conventional cars?
