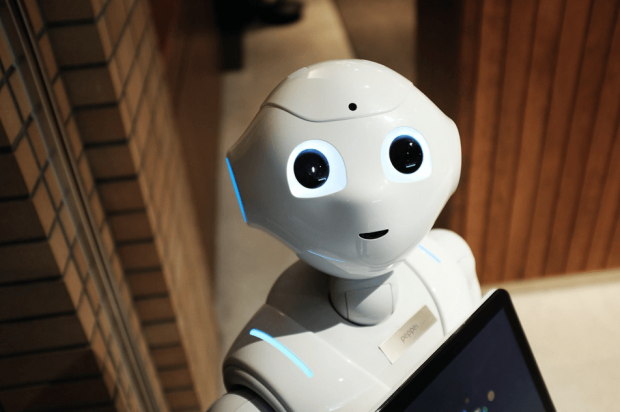

New technology often presents exciting possibilities. Whether there’s potential for new discoveries about our universe, or it simply makes our day-to-day lives a little easier, we’re always keen to explore it. The rise of artificial intelligence (AI) has been a fascinating example of how complex technological concepts can affect the minutiae of our lives.

However, as is often the case, the technology seems to have developed far more quickly than our understanding of how it fits into society. While Asimovian fiction has made us savvy to the apocalyptic extremes that AI could present, we haven’t really been prepared for the banal reality. As such, we’re struggling to catch up with the ethical and legal ramifications of domestic life that is enhanced by machine learning.

We’ve reached a point where AI has made its mark on multiple industries, from health services to entertainment, and even our own homes. So what aspects of our AI usage continue to be problematic? How can businesses integrate machine learning solutions while remaining on the right side of laws that haven’t yet been written?

Swift Technological Growth

AI has been a problem that we’ve been prodding at for decades, but over the last several years we have seen an unprecedented acceleration in its evolution. One contributor to this is the jump in the number of businesses and institutions that have contributed to research and development. Much like the nature of machine learning, the more data this technology has been fed, the swifter and more complex its maturity has been.

There has perhaps been an element of technological determinism at play, too: the idea that technology will advance almost of its own accord. In this case, researchers found that microprocessors and general CPUs weren’t designed to handle the workloads demanded by AI. As a result, they started using graphics processing units (GPUs) more often utilized in gaming PCs. This, in turn, sped up the advance of machine learning, which is also leading to the development of more advanced processors. Industries took notice of the possibilities, which in turn drove demand for further development.

This development has come in a variety of forms, too, some of them unexpected. There have been dedicated AI projects, such as IBM’s Watson supercomputer, which has also been utilized by third parties to advance industry-specific analytics technology. We have also seen AI growing through the development of personal, interconnected devices through the internet of things (IoT), driven by consumer demand. The result has been a rise in practical, affordable AI technology before there has really been any serious consideration of how these individual industries might be using it. Technology has far outpaced ethics, security, and legislation.

Adoption of AI Applications

When it comes to considering the ramifications of AI, we’ve often focused on big-picture issues. Will thinking machines replace human workers? At what point does AI become sentient? Yet, the more immediate challenges we face are with regard to how AI will transform our industries, how it affects our individual lives, and what impact it might have on infrastructure at a working level.

The finance sector has been an early adopter of machine thinking. It uses the technology to analyze data in order to make investment and stock market trading decisions. A 2017 report found there may be regulatory issues that the industry isn’t prepared for: computer-generated investment algorithms may defy auditing and make decisions that humans can’t explain or understand. In education, AI is being utilized for personalized learning plans, analyzing students’ work to recommend real-time adjustments to their curriculum. Yet, there is no regulation currently in place governing the limits of how this should be used, or how closely teachers should follow recommendations.

Multiple industries have fully embraced AI but have not been able to see the potential consequences until after its use has been demonstrated, and are scrambling to take adequate precautions. This perfectly illustrates the two-fold issues at play in the Collingridge Dilemma. It is difficult to control the use of technology once it becomes entrenched, yet the impact can’t usually be predicted until there is sufficient information available. Like AI itself, we can only make useful predictions when supplied with sufficient quality data.

The Precautionary Principle

It is generally accepted that the most effective solution to the Collingridge Dilemma is the Precautionary Principle: that technology should not be embraced until its developers can demonstrate that it poses no harm to individuals, groups, or society as a whole. It also implies a social responsibility to cease usage of a technology that is already in place until its harmful potential has been eliminated.

However, the application of this principle is not always practical. When it comes to AI, usage varies from industry to industry, and the pace of technological adoption far outpaces our legislative system. Perhaps a more appropriate solution lies in initially extending industry regulations that currently exist while preparing for more specific regulations to be put in place.

For example, in healthcare, AI has become a positive influence in managing patients’ data and making treatment more efficient by basing diagnoses on analysis. There is a need to address ethical and legal concerns that are present regarding patient privacy, accountability in cases of negligence, and potential biases based on the data fed to thinking machines. The FDA has already begun to produce guidelines regarding the use of AI in healthcare. This informed, industry-specific application of independent regulatory bodies may offer a solution in other sectors.

While we are keen to embrace new technology, it is also important to balance this with a responsible approach to the risks. Of course, the Collingridge Dilemma demonstrates the difficulty in applying effective protection without being in possession of sufficient data. As AI becomes more present in our everyday lives, there is a need to regularly assess the ethical and legal ramifications, and for industry regulatory bodies to make informed decisions about potential applications of appropriate guidelines.
