It’s only been about two months since GPT-3 was released by OpenAI, an artificial intelligence (AI) research organization whose co-founders include Elon Musk. Since then, GPT-3 has taken the technology industry by storm. Social media has been flooded with examples of writing produced by the model, including articles, essays, and even code. In this article, I’ll provide a breakdown of this groundbreaking technology, so read on!

What is GPT-3?

GPT stands for generative pre-trained transformer, and GPT-3 is the third model in the series. It is a task-agnostic natural language processing (NLP) model that requires very little fine-tuning. This means that the model only needs to be trained once to be able to complete a variety of tasks. Given just one or a few examples of a task in its prompt, it can often complete that task.
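To make "one or a few examples" concrete, here is a minimal sketch of how such a prompt could be assembled as plain text. The function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are my own illustrative assumptions, not an official OpenAI format, and the sketch only builds the text; it does not call any model.

```python
def build_few_shot_prompt(task_description, examples, query):
    """Assemble a plain-text prompt: a task description, a few worked
    examples, and a new input for the model to complete.
    (Hypothetical helper; the layout is an assumption for illustration.)"""
    lines = [task_description, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The prompt ends with an unanswered "Output:" for the model to fill in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French.",
    [("cheese", "fromage"), ("sea otter", "loutre de mer")],
    "peppermint",
)
print(prompt)
```

Passing an empty list of examples gives a prompt with no demonstrations at all, which corresponds to asking the model to do the task from the description alone.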

In other words, the same model can generate long-form text and handle many other language tasks without being adjusted very much.

The model can create original long-form text, such as an essay or article, in less than 10 seconds, given a one-sentence prompt. The best essays written by the model fooled 88% of people into believing that they were written by humans. How cool is that?!

At this point, you must be wondering how this technology works. Let me explain…

How Does GPT-3 Work?

GPT-3 is a transformer neural network. It is trained on an extensive amount of text, from which it picks up patterns that let it write similarly to humans.

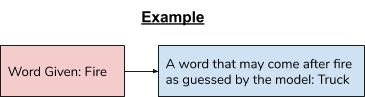

It’s trained by being given a stretch of text and then guessing the word that comes next. This process is repeated billions of times. Each time the guess is off, the model’s parameters are adjusted slightly so that a better guess becomes more likely, which gradually reinforces correct writing patterns. The more text the model trains on like this, the more patterns end up encoded in its parameters.

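Parameters are the values that a neural network attempts to optimize during training. In the case of GPT-3, the parameters are numbers (the network’s weights) that together capture the patterns of writing the model has learned; no single parameter is a rule on its own.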
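As a toy illustration of learning to predict text from examples, the sketch below counts which word tends to follow each word in a tiny made-up corpus. This is not how GPT-3 works internally (GPT-3 is a large neural network, not a table of counts), and the function names and corpus are assumptions made up for this example. The counts loosely play the role of parameters: they are values set from the training data.

```python
from collections import Counter, defaultdict


def train_next_word_counts(text):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_next_word_counts(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

Here "cat" follows "the" twice, while "mat" and "fish" follow it once each, so "cat" is the prediction. A real language model replaces the lookup table with billions of learned weights, but the goal of guessing likely continuations is similar in spirit.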

To understand how extensively the GPT-3 model was trained, here are some statistics:

- The entire English Wikipedia is just 0.6% of the model’s training data.

- Its training dataset contains approximately 500 billion tokens (words and word fragments), and the model itself has 175 billion parameters!!

- The computing power required to train the model is estimated to have cost around $12 million.
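The Wikipedia figure can be sanity-checked with a little arithmetic. The per-dataset token counts below are not stated in this article; they are the approximate values from the dataset table in the GPT-3 paper as I recall them, so treat them as assumptions and verify them against the paper.

```python
# Approximate token counts in billions, assumed from the GPT-3 paper's
# dataset table (not from this article).
dataset_tokens_billions = {
    "Common Crawl (filtered)": 410,
    "WebText2": 19,
    "Books1": 12,
    "Books2": 55,
    "Wikipedia": 3,
}

total_tokens = sum(dataset_tokens_billions.values())
wikipedia_share = dataset_tokens_billions["Wikipedia"] / total_tokens

print(f"Total: about {total_tokens} billion tokens")
print(f"Wikipedia share: {wikipedia_share:.1%}")  # prints "Wikipedia share: 0.6%"
```

Under these assumed figures, the datasets add up to roughly 500 billion tokens, and Wikipedia's 3 billion tokens come to about 0.6% of the total.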

Being trained on so much data is one of the reasons that the model is so significant. Let me explain…

Why is GPT-3 a Significant Advancement?

There are three main reasons that GPT-3 is so significant:

- It only needs a few demonstrations of how to complete a task before being able to finish it, and sometimes, it does not require any demonstrations!

- As a result of being trained on such vast amounts of data, the model performs strongly on many of the tasks it attempts, in some cases rivaling models that were fine-tuned for a single task.

- The GPT-3 model is unique because it is task-agnostic without requiring a lot of fine-tuning.

a. Other models can either do a variety of tasks, but need to be fine-tuned, or don’t require tuning, but perform much more specific tasks.

This allows GPT-3 to have many real-world applications!

Applications of GPT-3

Chatbots

One of the more unusual chatbots powered by GPT-3 is one that allows people to talk with famous figures (even fictional ones). As a result of being trained on lots of text from the internet, including digitized books, the model learned to imitate the way that specific figures write and express themselves. This has allowed it to hold conversations with users while replying in the style of a famous figure, such as Elon Musk, Shakespeare, or Aristotle.

Legal Jargon Translator

One use of GPT-3 allowed a user to input plain text and get back the same text translated into legal language. It can also be used the other way around, to translate legal jargon into comprehensible English.

Writing Code

Finally, GPT-3 has also been used to write code for design elements based on a description. Although it’s not perfect, it is still shockingly good!

The Cons of GPT-3

As powerful as it is, GPT-3 still has some cons associated with it, which are among the reasons that the model can only be accessed through a private API. These cons include:

- The model can reproduce biases found in its training data, and its output is sometimes offensive or not politically correct.

- The model could possibly be used to generate text that is propaganda or fake news.

This demonstrates that even if the model is accurate, it still needs to be improved upon to ensure that human biases do not influence its performance. Furthermore, if in the wrong hands, it can cause a lot of damage.

Key Takeaways

- GPT-3 is a task-agnostic, natural language processing (NLP) model that requires very little fine-tuning.

- It’s trained by being given a stretch of text and then guessing the word that comes next.

- Some applications of GPT-3 include generating long-form text, such as essays, powering chatbots and legal jargon translators, and generating code.

- Some of the cons of the model include reproducing biases from its training data, as well as possibly being used to generate text that is propaganda or fake news.

With the arrival of GPT-3, it’s certain that there are extraordinary advancements occurring in the field of AI. However, there are still many improvements to be made to ensure that the biases that humans have are not ingrained into AI models.

Leave a comment down below regarding your views on GPT-3! Are you excited by this new advancement in AI, or do you believe it’s overhyped?

Wow, gpt-3 is really cool!

Yes, I totally agree! I can’t wait to see future applications of this technology.

This was a good read. In the future, I believe it is inevitable that we will see works of fiction and literature being created by technology like this. However, many people may still want to know that the words they are reading in a book have come from a real person that has experienced (at least to some extent) the same emotions being written about, rather than from a machine. Nevertheless, still cool.

Thank you for reading, Aman! I agree with you, I believe there will still be a demand for human writers in the future, even as this technology advances. Furthermore, it still has a long way to go to reach the capabilities of a human author without displaying biases.