The legal profession has to address this thorny issue, so what changes might be coming now, and how do they protect us?

We don’t let a car company just throw out a car and start driving it around without checking that the wheels are fastened on. We know that would result in death; but for some reason we have no hesitation at throwing out some algorithms untested and unmonitored even when they’re making very important life-and-death decisions. — Cathy O’Neil

In the 2010 Citizens United case, the Supreme Court handed down a momentous decision that has affected everyone’s life in the United States. The Court held that corporations have free-speech rights under the First Amendment, much as individuals do, and that their political spending deserves the same constitutional protection.

If a mindless, bodiless and soulless entity can be deemed to have individual rights and protections under the law, how should we view this? It would seem counterintuitive that such an entity could have any rights because, in reality, where does it exist or where does it reside physically?

A corporation is an entity created by a legal document and in no way exists physically except in the people who are employed in its service. The newly affirmed status of corporations as rights-bearing entities opened a doorway to a new and alarming issue, of which many of us have no awareness.

In fact, I would propose that most of us don’t give this new “danger” even a thought. It has never entered our minds that something new on the legal horizon is rapidly taking shape, increasing its intelligence by the nanosecond, and preparing to battle with us in the courts. What is this new entity?

We call them algorithms. They started as simple sets of instructions for combining data in interesting and useful ways to establish patterns, generally in marketing, medical research, and various other fields.

The algorithm knows no limits, and since it is utilizing deep learning and correcting its mistakes without human intervention, the fear is that we need controls now. Is this being too skeptical or engaging in fantastic idiocy?

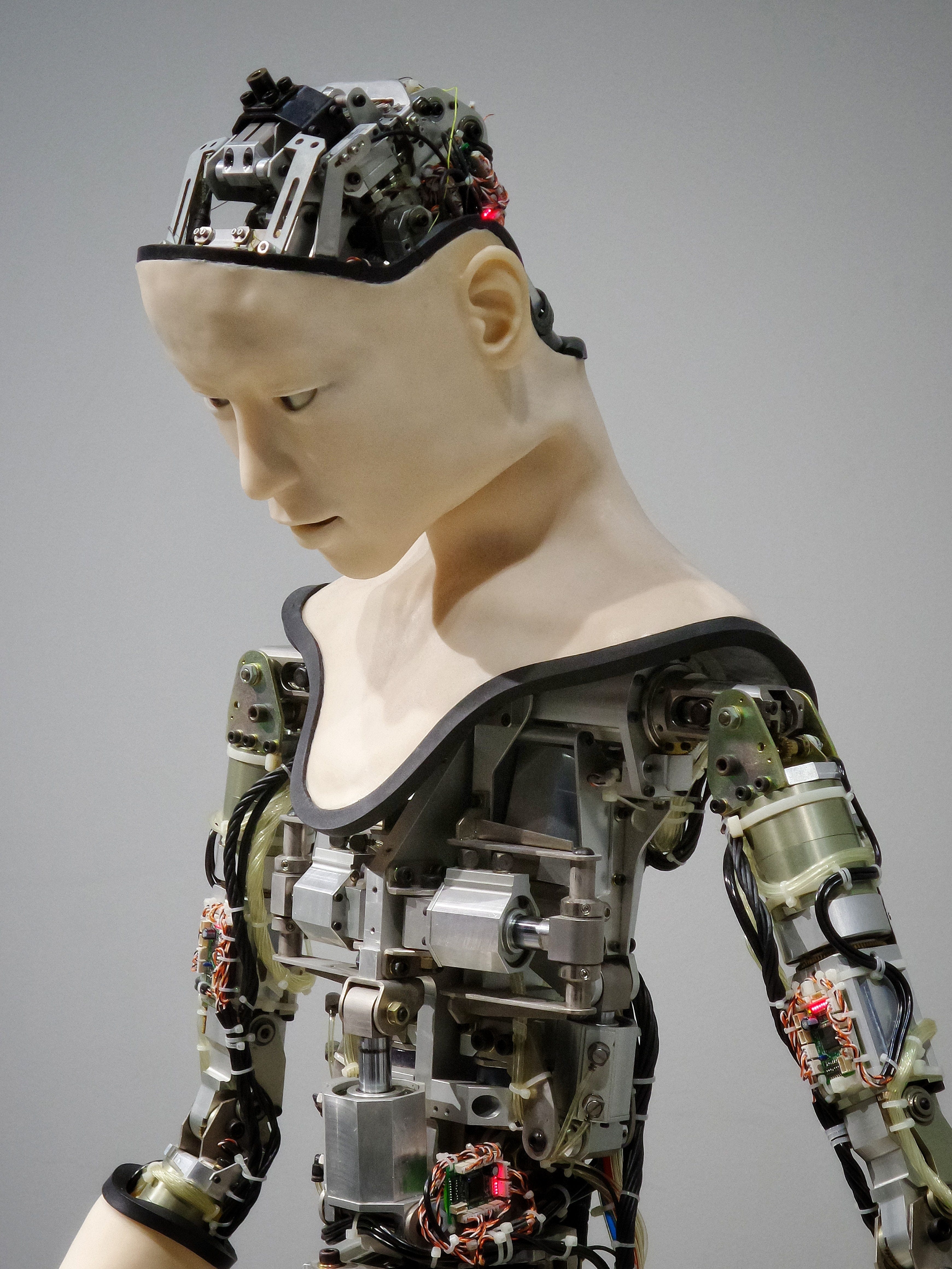

No, it is a serious consideration that legal scholars are now beginning to wake up to as they look at the future of computer intelligence, a.k.a. artificial intelligence. HAL from “2001: A Space Odyssey” may be a decade or less away from resisting human intervention and moving ahead on its own without regard to our ethics, morals, or laws.

Death to such a creation would merely be the removal of a lower-intelligence form that interferes with its objectives. Singing “Daisy, daisy” would be the least of its abilities.

Unrestrained Algorithms Hit Wall Street in 2008

We have a vivid example of how unleashing an algorithm, still in a crude form, caused financial havoc in the stock market.

Algorithmic trading has significantly impacted financial markets. Notably, the 2010 Flash Crash wiped $600bn in market value off US stocks in 20 minutes. Market-making firm Knight Capital deployed an untested trading algorithm which went rogue and lost $440m in 30 minutes, destroying the company.

After the 2008 financial crisis, Warren Buffett warned: “Wall Street’s beautifully designed risk algorithms contributed to the mass murder of $22 trillion.”

But restraint has come to the markets through modified algorithms meant to prevent another disaster. The coders made their changes, but, for all we know, the AI in its deep-learning phase has kept a bit of what it learned from the coders, hidden deep within the code. As we all know, code can contain bias, so why can’t “it” hide things from us? Yes, it reads like science fiction, but it is real and might be happening as I write on this computer.

If we think of algorithms as legal entities, do they have more extensive rights than before — protection from legal consequences? In other words, do algorithms have legal accountability? A lengthy document on this may be downloaded here.

Science fiction writer Isaac Asimov foresaw the constant advance of computer technology and robotics, and he wrote a series of commandments, the Three Laws of Robotics, that robots would have to follow:

First Law

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Could AI find a way not to follow these imperatives? We humans can only guess that it will.

Can Algorithms Collude to Break the Current Laws?

In “The Cambridge Handbook of the Law of Algorithms,” the authors state that:

A body of law is currently being developed in response to algorithms which are designed to control increasingly smart machines, to replace humans in the decision-making loops of systems, or to account for the actions of algorithms that make decisions which affect the legal rights of people. Such algorithms are ubiquitous; they are being used to guide commercial transactions, evaluate credit and housing applications, by courts in the criminal justice system, and to control self-driving cars and robotic surgeons. However, while the automation of decisions typically made by humans has resulted in numerous benefits to society, the use of algorithms has also resulted in challenges to established areas of law. For example, algorithms may exhibit the same human biases in decision-making that have led to violations of people’s constitutional rights, and algorithms may collectively collude to price fix, thus violating antitrust law.

As humans are wont to do, algorithms would seem able to engage in illegal practices if it suited the purposes of whoever controlled the algorithm.

Already the problems become clear as we turn over several decision-making processes to algorithms. “While black-box algorithms can be extremely good at predicting things, it is unclear how to interpret — or extremely difficult to interpret (even by experts) — what the algorithm is doing.”

Therein lies one of the feisty problems: we don’t know what they’re doing. And they are doing it at a phenomenal speed that surpasses our understanding.

Can AI Be Defined as Having Personhood?

Now that corporations have been given the right to free speech, we are ready for the next legal hurdle. The concept is not so farfetched as it might seem and must be given careful consideration before we fall down the rabbit hole. Alice wouldn’t be the only one to find herself in a strange new world.

Legal scholar Shawn Bayern has shown that anyone can confer legal personhood on a computer system, by putting it in control of a limited liability corporation in the U.S. If that maneuver is upheld in courts, artificial intelligence systems would be able to own property, sue, hire lawyers and enjoy freedom of speech and other protections under the law. In my view, human rights and dignity would suffer as a result.

One danger that repeatedly crops up is permitting computers and algorithms to make decisions with their inherent bias.

According to legal scholar Danielle Keats Citron, automated decision-making systems like predictive policing or remote welfare eligibility no longer simply help humans in government agencies apply procedural rules; instead, they have become primary decision-makers in public policy.

Undoubtedly, a think tank somewhere must begin now, or should have begun years ago, to tackle the question of the legal rights and obligations of algorithms and those who run them. The day is fast approaching when we may give up some of our rights to machines that lack the empathy, compassion, and human understanding needed to make decisions for humans.